
What we saw at this year's Computer Vision and Pattern Recognition Conference 2023: Part One

(Credit: twitter.com/cvpr)

Members of Amii’s staff recently attended the Conference on Computer Vision and Pattern Recognition (CVPR), sponsored by the Computer Vision Foundation (CVF) and the Institute of Electrical and Electronics Engineers (IEEE). CVPR is arguably the leading annual conference in the field of computer vision and artificial intelligence. In part one below, we discuss the team’s takeaways from the workshops and keynote presentations. In part two, we will delve deeper to highlight some recent trends and advances that caught our eyes.

Introduction and Keynote take-aways

This year, CVPR took place in Vancouver, hosting more than 10,000 registrants, the vast majority of whom attended in person. In just the second year after the shift to a primarily virtual setting due to the pandemic, the conference exhibited a mix of anticipation and mild unease. Attendees were eager to reconnect in person, overcoming some awkwardness stemming from a prolonged period of digital-only interactions. Furthermore, the researchers found themselves at a poignant crossroads of technology. While they were excited by the advancements in generative AI, they were simultaneously concerned that the escalating computational demands of these technologies might leave the academic community trailing behind.

The conference began with two intense days of concurrent tutorials and workshops covering diverse topics, ranging from the practical (e.g., self-driving cars) to the theoretical (e.g., foundations of neural networks).

The well-attended workshop “Scholars and Big Models - How Can Academics Adapt” featured presentations by prominent researchers such as Jitendra Malik and Jon Barron. Jitendra underscored the importance of not conflating “big vs. small science” with “academia vs. industry” and offered encouraging remarks about the future role of academia in the new ecosystem of large models. Jon went further, offering specific directions (i.e., related to computer graphics) for future academic research, but concluded that large models appear to offer significant advantages, putting academia at a disadvantage should it not collaborate closely with industry.

As an applied AI researcher constantly hunting for new problems to tackle, I found it refreshing to see that we are not alone in our concerns about ever-expanding model sizes and computational requirements. The words of wisdom from very experienced researchers, many of whom had been through several such disruptions in the field, were very timely. However, given the stark differences in forecasts (and in the proposed strategies to prepare) for the future of AI, only time will tell how the field will develop and what our roles in the future may look like.

The main conference featured three exceptional keynote speakers. On the first day, Rodney Brooks took the audience through a historical journey, citing many of his personal experiences and sharing his insights on “Revisiting Old Ideas with Modern Hardware”. To our amazement, he shared a historical example of (the all-familiar) AI hype in the form of the subtitle of one of Rosenblatt’s seminal papers published in 1958, “Introducing the perceptron - A machine which senses, recognizes, remembers, and responds like the human mind.” He reminded researchers that “Ideas come around again and again and get better results sometimes.” He ended his talk by encouraging young researchers to go beyond what’s fashionable and proposed that “cycling back to old ideas could be fruitful in the presence of massive computation.” This resonates well with my own intuition that history often holds valuable clues about the future of a field and young researchers should avoid the pitfall of over-focusing on the hottest and latest trends.

On the second day, Yejin Choi presented an entertaining and insightful talk titled “An AI Odyssey: the Dark Matter of Intelligence”, in which she mused on the possible futures for AI research. She presented some of her refreshingly sober and rigorous work on the limitations of Large Language Models (LLMs) when it comes to compositionality, casting doubt on whether modern transformers are sufficient for achieving Artificial General Intelligence (AGI). She shared an important insight that “generation is easier than understanding for the current AI. For humans it seems to be the other way around.” We appreciated her careful analysis of the shortcomings of the latest LLMs. Compositionality is an essential element of intelligence, and establishing that transformers might not be the right tools for the job is an invitation to search for more promising alternatives, perhaps in the history of the field (e.g., neuro-symbolic systems) or by charting new territories.

On the final day, Larry Zitnick presented an inspiring talk on “Modeling Atoms to Address our Climate Crisis,” where he made the case that machine vision researchers could fruitfully apply their skills to solving problems in chemistry, for instance, material discovery, new batteries, and hazardous waste cleanup. He presented the Open Catalyst Project, a NeurIPS competition in its third iteration that aims to encourage researchers to join forces by competing to improve performance on the current benchmarks of a massive new open dataset. As scientists, we found this talk particularly inspiring. The prospect of developing AI models in the service of progress in science (in this case chemistry and materials science) is intoxicatingly exciting. It is only relatively recently that the applications of AI have moved beyond e-commerce and advertising to having real impact on science and engineering.

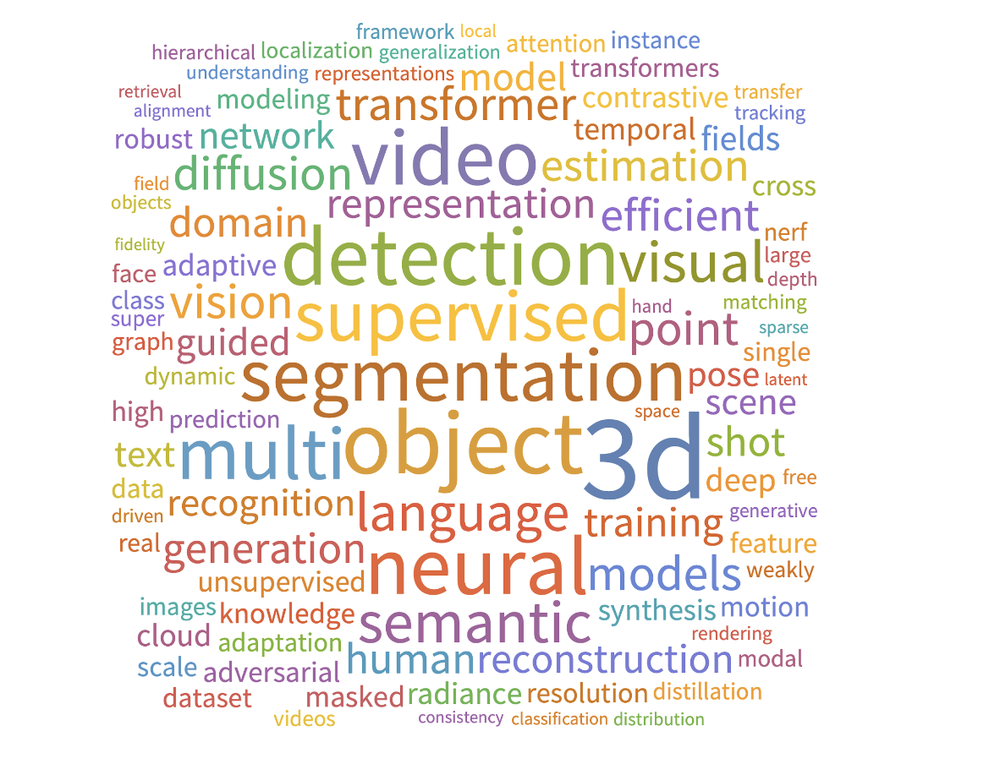

Each day included two poster sessions featuring approximately 400 posters per session! It is impossible to give an exhaustive summary of all the important work presented, but in part two of this series we attempt to dig a little deeper to highlight the trends and identify ground-breaking work that caught our eyes.
